Sum of Squared Errors

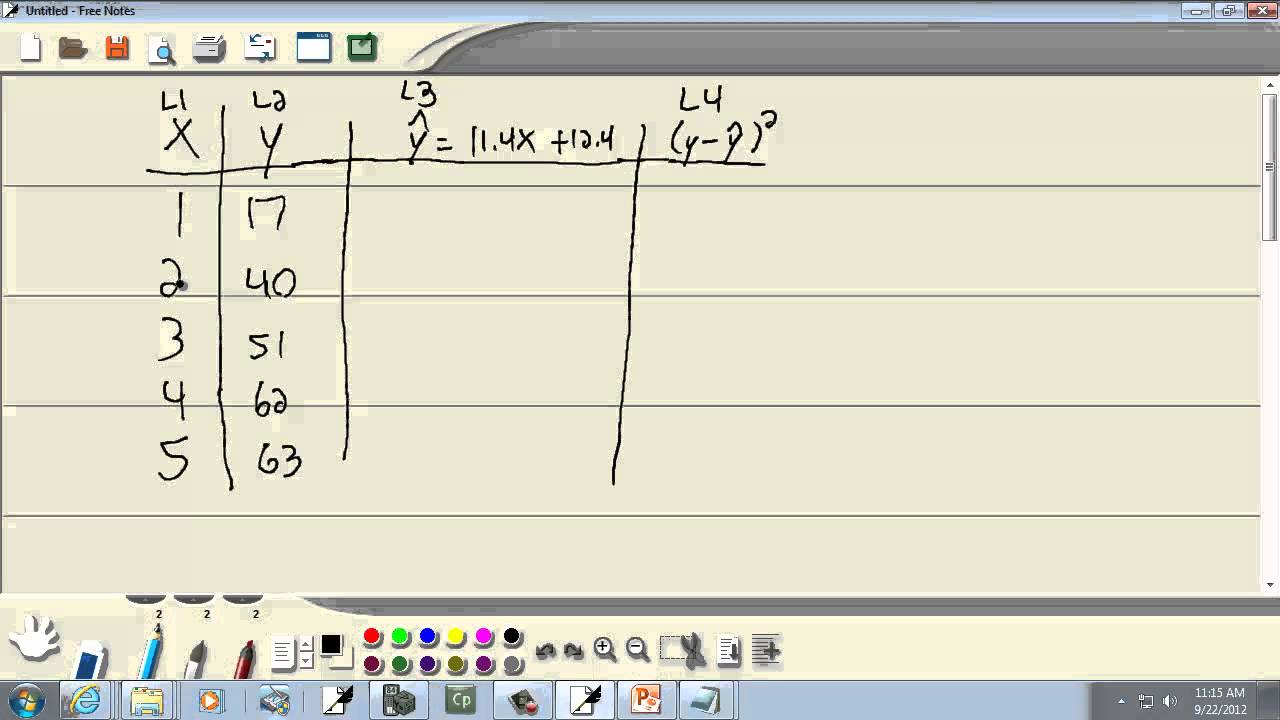

Suppose we have values for x and y and we want to add the R squared value to a regression. The residual sum of squares (RSS) is a measure of the discrepancy between the data and an estimation model, such as a linear regression. A small RSS indicates a tight fit of the model to the data.

But if the algorithm guesses 2, 3, 6, then the errors are 0, 0, 2, the squared errors are 0, 0, 4, and the MSE is a higher 1.333.
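The arithmetic in this example can be checked with a few lines of Python. This is only a sketch: the true values 2, 3, 4 are an assumption (the text does not state them; they are chosen so that guesses of 2, 3, 6 give errors of 0, 0, 2), and `mean_squared_error` is a name introduced here for illustration.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return sum(squared_errors) / len(squared_errors)


# Assumed true values (not given in the text).
actual = [2, 3, 4]
guesses = [2, 3, 6]

mse = mean_squared_error(actual, guesses)
print(round(mse, 3))  # 1.333
```

A single miss of 2 contributes 4 to the sum, which is why one bad guess is enough to raise the MSE noticeably.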

In SciPy's curve_fit, popt is an array of optimal values for the parameters, chosen so that the sum of the squared residuals of f(xdata, *popt) - ydata is minimized. In MATLAB, perf = sse(net,t,y,ew,Name,Value) has two optional function parameters that set the regularization of the errors and the normalizations of the outputs and targets.

In order to calculate R squared, we need two data sets corresponding to two variables. R squared can then be calculated by squaring the correlation coefficient r, or by simply using Excel's RSQ function.
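Outside a spreadsheet, the "square r" route can be sketched in plain Python. The sample data below is made up for illustration, and `pearson_r` is a hypothetical helper name, not a library function.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Made-up sample data for two variables.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

r = pearson_r(x, y)
r_squared = r ** 2
print(round(r_squared, 3))  # 0.6
```

For a simple linear regression with one predictor, this squared correlation is the same quantity that a regression's R squared reports.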

Pseudo R-squared for logistic regression. To compute one-standard-deviation errors on the parameters, use perr = np.sqrt(np.diag(pcov)). For the MSE, repeat the squared-difference calculation for all observations.

To calculate the SSE by hand, you need to get your data organized in a table and then perform some fairly simple calculations. The sse function measures performance according to the sum of squared errors.

One way to express R-squared is as the sum of squared fitted-value deviations divided by the sum of squared original-value deviations. Squared deviations from the mean (SDM) result from squaring deviations. In probability theory and statistics, the definition of variance is either the expected value of the SDM (when considering a theoretical distribution) or its average value (for actual experimental data). Computations for analysis of variance involve the partitioning of a sum of SDM.
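The ratio form of R-squared, and the partitioning of the sum of squared deviations, can be illustrated with a small least-squares fit. This is a sketch on made-up data; `fit_line` is a hypothetical helper, and the identity checked at the end holds for an ordinary least squares line with an intercept.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for a single predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Made-up sample data.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

intercept, slope = fit_line(x, y)
fitted = [intercept + slope * xi for xi in x]
mean_y = sum(y) / len(y)

ss_fitted = sum((f - mean_y) ** 2 for f in fitted)        # fitted-value deviations
ss_total = sum((yi - mean_y) ** 2 for yi in y)            # original-value deviations
ss_resid = sum((yi - f) ** 2 for yi, f in zip(y, fitted))  # unexplained part

r_squared = ss_fitted / ss_total
print(round(r_squared, 3))             # 0.6
print(round(ss_fitted + ss_resid, 3))  # 6.0, equal to ss_total
```

The total sum of squared deviations (6.0) splits into an explained part (3.6) and a residual part (2.4), which is the partitioning that analysis of variance relies on.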

The sum of squared errors, or SSE, is a preliminary statistical calculation that leads to other data values. The estimates of the beta coefficients are the values that minimize the sum of squared errors for the sample. In SciPy's curve_fit, how the sigma parameter affects the estimated covariance depends on the absolute_sigma argument.
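To make the "values that minimize the SSE" idea concrete, the sketch below computes the closed-form least-squares estimates for a single predictor and then checks that nudging either coefficient makes the SSE larger. The data is made up, and `sse` and `ols_estimates` are hypothetical helper names (this `sse` is unrelated to the MATLAB function of the same name).

```python
def sse(xs, ys, b0, b1):
    """Sum of squared errors of the line y = b0 + b1 * x on the sample."""
    return sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))


def ols_estimates(xs, ys):
    """Closed-form least-squares estimates (b0, b1) for one predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    return b0, b1


# Made-up sample data.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

b0, b1 = ols_estimates(x, y)
best = sse(x, y, b0, b1)

# Evaluate the SSE at small perturbations around the estimates.
steps = (-0.1, 0.0, 0.1)
nudged = [
    sse(x, y, b0 + d0, b1 + d1)
    for d0 in steps
    for d1 in steps
    if (d0, d1) != (0.0, 0.0)
]

print(round(best, 3))                 # 2.4
print(all(s > best for s in nudged))  # True
```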

In ordinary least squares (OLS) regression, the R² statistic measures the amount of variance explained by the regression model. MSE is sensitive to outliers, because every error is squared before it is averaged.

A residual sum of squares (RSS) is a statistical technique used to measure the amount of variance in a data set that is not explained by the regression model. The protection that adjusted R-squared and predicted R-squared provide is critical because R-squared on its own gives no incentive to stop adding variables.

In MATLAB, sse is a network performance function. R-squared tends to reward you for including too many independent variables in a regression model, and it doesn't provide any incentive to stop adding more. When you have a set of data values, it is useful to be able to find how closely related those values are.

SS represents the sum of squared differences from the mean and is an extremely important term in statistics. In this context, errors mainly refer to the difference between the actual observed sample values and the predicted values.

The sum of the squared deviations, (X - Xbar)², is also called the sum of squares or, more simply, SS.
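As a quick illustration, SS can be built up column by column: the scores, their deviations from the mean, and the squared deviations. The scores below are made up, and `sum_of_squares` is a name introduced for this sketch.

```python
def sum_of_squares(values):
    """SS: sum of squared deviations of each value from the mean."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


# Made-up scores.
scores = [1, 2, 3, 4, 5]
mean = sum(scores) / len(scores)

# Three columns: X, X - Xbar, (X - Xbar) squared.
for value in scores:
    deviation = value - mean
    print(value, deviation, deviation ** 2)

ss = sum_of_squares(scores)
print(ss)  # 10.0
```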

Adjusted R-squared and predicted R-squared use different approaches to help you fight that impulse to add too many variables. For the MSE, sum all of the squared differences and divide by the number of observations.
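The adjustment can be sketched with the commonly used formula, adjusted R² = 1 - (1 - R²)(n - 1)/(n - p - 1), where n is the number of observations and p is the number of predictors. The function name and the example numbers below are illustrative assumptions; predicted R-squared needs a leave-one-out refit and is not shown.

```python
def adjusted_r_squared(r_squared, n_obs, n_predictors):
    """Adjusted R-squared for a model with an intercept and n_predictors predictors."""
    dof = n_obs - n_predictors - 1
    if dof <= 0:
        raise ValueError("need more observations than predictors plus one")
    return 1 - (1 - r_squared) * (n_obs - 1) / dof


# Same R-squared and sample size, but a second predictor that adds nothing.
one_predictor = adjusted_r_squared(0.6, n_obs=5, n_predictors=1)
two_predictors = adjusted_r_squared(0.6, n_obs=5, n_predictors=2)

print(round(one_predictor, 3))   # 0.467
print(round(two_predictors, 3))  # 0.2
```

With R-squared unchanged, the extra predictor lowers the adjusted value, which is the penalty that plain R-squared lacks.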

(1) ... normally distributed errors with constant variance, (2) fits a simple linear model to the data, and (3) reports the R-squared. The letter b is used to represent a sample estimate of a beta coefficient. The value of R² ranges in [0, 1], with a larger value indicating that more variance is explained by the model (a higher value is better). For OLS regression, R² is defined as R² = 1 - SSE/SST, where SSE is the sum of squared errors and SST is the total sum of squared deviations of y from its mean.

To find the MSE, take the observed value, subtract the predicted value, and square that difference. In statistics, the residual sum of squares (RSS), also known as the sum of squared residuals (SSR) or the sum of squared estimate of errors (SSE), is the sum of the squares of residuals (deviations of predicted from actual empirical values of data).

But be aware that Sum of Squared Errors (SSE) and Residual Sum of Squares (RSS) are sometimes used interchangeably, which can confuse readers. Strictly speaking, from a statistical point of view, errors and residuals are completely different concepts. Notice that the numerator of the fraction in the R-squared definition is the sum of the squared errors (SSE), which linear regression minimizes.

The third column represents the squared deviation scores, (X - Xbar)², as they were called in Lesson 4. The smaller the MSE, the better the model's performance.
